A Brief Look at How ConcurrentHashMap Works


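All of the code below comes from ConcurrentHashMap.java in the JDK 1.8 source. The class is large and its ideas and algorithms are subtle, so this write-up may contain omissions or mistakes; corrections are welcome. Its methods and principles are also compared with those of HashMap.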

Related article: A Brief Look at How HashMap Works

Source Reading 1

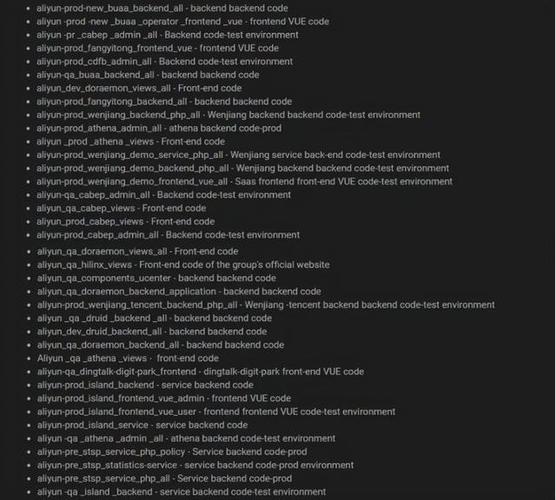

Part of the ConcurrentHashMap source:

    private static final int MAXIMUM_CAPACITY = 1 << 30;
    private static final int DEFAULT_CAPACITY = 16;
    static final int MAX_ARRAY_SIZE = Integer.MAX_VALUE - 8;
    private static final int DEFAULT_CONCURRENCY_LEVEL = 16;
    private static final float LOAD_FACTOR = 0.75f;
    static final int TREEIFY_THRESHOLD = 8;
    static final int UNTREEIFY_THRESHOLD = 6;
    static final int MIN_TREEIFY_CAPACITY = 64;
    private static final int MIN_TRANSFER_STRIDE = 16;
    private static int RESIZE_STAMP_BITS = 16;
    private static final int MAX_RESIZERS = (1 << (32 - RESIZE_STAMP_BITS)) - 1;
    private static final int RESIZE_STAMP_SHIFT = 32 - RESIZE_STAMP_BITS;

    ...

    public ConcurrentHashMap() {
    }

    public ConcurrentHashMap(int initialCapacity) {
        if (initialCapacity < 0)
            throw new IllegalArgumentException();
        int cap = ((initialCapacity >= (MAXIMUM_CAPACITY >>> 1)) ?
                   MAXIMUM_CAPACITY :
                   tableSizeFor(initialCapacity + (initialCapacity >>> 1) + 1));
        this.sizeCtl = cap;
    }

    public ConcurrentHashMap(Map<? extends K, ? extends V> m) {
        this.sizeCtl = DEFAULT_CAPACITY;
        putAll(m);
    }

    public ConcurrentHashMap(int initialCapacity, float loadFactor) {
        this(initialCapacity, loadFactor, 1);
    }

    public ConcurrentHashMap(int initialCapacity,
                             float loadFactor, int concurrencyLevel) {
        if (!(loadFactor > 0.0f) || initialCapacity < 0 || concurrencyLevel <= 0)
            throw new IllegalArgumentException();
        if (initialCapacity < concurrencyLevel)   // Use at least as many bins
            initialCapacity = concurrencyLevel;   // as estimated threads
        long size = (long)(1.0 + (long)initialCapacity / loadFactor);
        int cap = (size >= (long)MAXIMUM_CAPACITY) ?
            MAXIMUM_CAPACITY : tableSizeFor((int)size);
        this.sizeCtl = cap;
    }

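As the constructors show, the initial table size and the load factor can both be specified, and most parameters match HashMap: the default initial capacity (16), the maximum capacity (1 << 30), and the default load factor (0.75). Note that in these constructors loadFactor is only used to compute the initial table size; it is not stored in a field.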
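To make the sizing arithmetic concrete, here is a minimal sketch that re-implements the capacity computation from the constructors above. The class name `TableSizing` and the method name `capacityFor` are made up for illustration; `tableSizeFor` is a private method in the JDK, re-implemented here on the assumption that it uses the usual JDK 1.8 power-of-two rounding.

```java
// Illustration only: in the JDK these are private members of ConcurrentHashMap.
final class TableSizing {
    static final int MAXIMUM_CAPACITY = 1 << 30;

    // Smallest power of two >= c (assumed to match JDK 1.8 tableSizeFor).
    static int tableSizeFor(int c) {
        int n = c - 1;
        n |= n >>> 1;
        n |= n >>> 2;
        n |= n >>> 4;
        n |= n >>> 8;
        n |= n >>> 16;
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    // Mirrors ConcurrentHashMap(int initialCapacity).
    static int capacityFor(int initialCapacity) {
        if (initialCapacity < 0)
            throw new IllegalArgumentException();
        return (initialCapacity >= (MAXIMUM_CAPACITY >>> 1))
                ? MAXIMUM_CAPACITY
                : tableSizeFor(initialCapacity + (initialCapacity >>> 1) + 1);
    }

    // Mirrors ConcurrentHashMap(int initialCapacity, float loadFactor, int concurrencyLevel).
    static int capacityFor(int initialCapacity, float loadFactor, int concurrencyLevel) {
        if (!(loadFactor > 0.0f) || initialCapacity < 0 || concurrencyLevel <= 0)
            throw new IllegalArgumentException();
        if (initialCapacity < concurrencyLevel)
            initialCapacity = concurrencyLevel;
        long size = (long) (1.0 + (long) initialCapacity / loadFactor);
        return (size >= (long) MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : tableSizeFor((int) size);
    }

    public static void main(String[] args) {
        System.out.println(capacityFor(16));           // prints 32 (16 + 8 + 1 = 25, rounded up)
        System.out.println(capacityFor(10));           // prints 16 (10 + 5 + 1 = 16)
        System.out.println(capacityFor(16, 0.75f, 1)); // prints 32 (1 + 16 / 0.75 = 22, rounded up)
    }
}
```

So unlike HashMap, where `new HashMap<>(16)` gives a 16-slot table, the single-argument constructor here asks for roughly 1.5 times the requested capacity before rounding up to a power of two.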

Source Reading 2

    transient volatile Node<K,V>[] table;
    private transient volatile Node<K,V>[] nextTable;
    private transient volatile long baseCount;
    private transient volatile int sizeCtl;
    private transient volatile int transferIndex;
    private transient volatile int cellsBusy;
    private transient volatile CounterCell[] counterCells;

    ...

    static class Node<K,V> implements Map.Entry<K,V> {
        final int hash;
        final K key;
        volatile V val;
        volatile Node<K,V> next;

        Node(int hash, K key, V val, Node<K,V> next) {
            this.hash = hash;
            this.key = key;
            this.val = val;
            this.next = next;
        }

        public final K getKey()       { return key; }
        public final V getValue()     { return val; }
        public final int hashCode()   { return key.hashCode() ^ val.hashCode(); }
        public final String toString(){ return key + "=" + val; }
        public final V setValue(V value) {
            throw new UnsupportedOperationException();
        }

        public final boolean equals(Object o) {
            Object k, v, u; Map.Entry<?,?> e;
            return ((o instanceof Map.Entry) &&
                    (k = (e = (Map.Entry<?,?>)o).getKey()) != null &&
                    (v = e.getValue()) != null &&
                    (k == key || k.equals(key)) &&
                    (v == (u = val) || v.equals(u)));
        }

        /**
         * Virtualized support for map.get(); overridden in subclasses.
         */
        Node<K,V> find(int h, Object k) {
            Node<K,V> e = this;
            if (k != null) {
                do {
                    K ek;
                    if (e.hash == h &&
                        ((ek = e.key) == k || (ek != null && k.equals(ek))))
                        return e;
                } while ((e = e.next) != null);
            }
            return null;
        }
    }

    ...

    static final class TreeNode<K,V> extends Node<K,V> {
        TreeNode<K,V> parent;  // red-black tree links
        TreeNode<K,V> left;
        TreeNode<K,V> right;
        TreeNode<K,V> prev;    // needed to unlink next upon deletion
        boolean red;

        TreeNode(int hash, K key, V val, Node<K,V> next,
                 TreeNode<K,V> parent) {
            super(hash, key, val, next);
            this.parent = parent;
        }

        Node<K,V> find(int h, Object k) {
            return findTreeNode(h, k, null);
        }

        /**
         * Returns the TreeNode (or null if not found) for the given key
         * starting at given root.
         */
        final TreeNode<K,V> findTreeNode(int h, Object k, Class<?> kc) {
            if (k != null) {
                TreeNode<K,V> p = this;
                do {
                    int ph, dir; K pk; TreeNode<K,V> q;
                    TreeNode<K,V> pl = p.left, pr = p.right;
                    if ((ph = p.hash) > h)
                        p = pl;
                    else if (ph < h)
                        p = pr;
                    else if ((pk = p.key) == k || (pk != null && k.equals(pk)))
                        return p;
                    else if (pl == null)
                        p = pr;
                    else if (pr == null)
                        p = pl;
                    else if ((kc != null ||
                              (kc = comparableClassFor(k)) != null) &&
                             (dir = compareComparables(kc, k, pk)) != 0)
                        p = (dir < 0) ? pl : pr;
                    else if ((q = pr.findTreeNode(h, k, kc)) != null)
                        return q;
                    else
                        p = pl;
                } while (p != null);
            }
            return null;
        }
    }

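As the code shows, the underlying structure is an array plus linked lists plus trees, the same as HashMap. What differs is how the operations are implemented and the use of the volatile keyword.

Why use the volatile keyword?

volatile guarantees visibility: every time a thread reads the variable, it sees the most recently written value. ConcurrentHashMap is meant to be used from multiple threads, so fields such as table, sizeCtl, and Node's val and next are declared volatile.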
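As a small illustration of the visibility guarantee (not code from ConcurrentHashMap), the sketch below has one thread spin on a volatile flag that another thread sets. The class and method names are invented for this example. With volatile, the spinning thread is guaranteed to see the write and stop; without it, the Java memory model gives no such guarantee.

```java
final class VolatileFlagDemo {
    // Hypothetical flag; remove `volatile` and the worker may spin forever.
    private static volatile boolean stop = false;

    // Returns true if the worker observed the flag and exited within the timeout.
    static boolean workerSeesFlag() {
        stop = false;
        Thread worker = new Thread(() -> {
            while (!stop) {
                // busy-wait until the write to `stop` becomes visible
            }
        });
        worker.setDaemon(true);     // never keep the JVM alive because of this demo
        worker.start();
        try {
            Thread.sleep(50);       // let the worker start spinning
            stop = true;            // volatile write, visible to the worker
            worker.join(5_000);     // wait up to 5 seconds for it to finish
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
        return !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println(workerSeesFlag());
    }
}
```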

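The key variable sizeCtl:

sizeCtl is 0: the table has not been initialized, and its initial capacity will be 16.

sizeCtl is positive: if the table has not been initialized, it holds the initial capacity; if the table has been initialized, it holds the resize threshold.

sizeCtl is -1: the table is being initialized.

sizeCtl is negative but not -1: the table is being resized. The field's Javadoc describes the value as -(1 + n), where n is the number of threads working on the resize together. In the JDK 1.8 code itself (see addCount later in this article), the value starts at (resizeStamp(tableLength) << RESIZE_STAMP_SHIFT) + 2 and is incremented by 1 for each helper, so it is the low 16 bits that hold 1 + the number of resizing threads.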
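The negative sizeCtl encoding is easier to see with numbers. The following is a sketch, not JDK code: the class `SizeCtlDemo` and the helper `resizers` are made up, and `resizeStamp` is re-implemented on the assumption that it matches the JDK 1.8 formula (leading-zero count of the table length with bit 15 set).

```java
final class SizeCtlDemo {
    static final int RESIZE_STAMP_BITS = 16;
    static final int RESIZE_STAMP_SHIFT = 32 - RESIZE_STAMP_BITS;

    // Assumed to match JDK 1.8 resizeStamp(int n).
    static int resizeStamp(int n) {
        return Integer.numberOfLeadingZeros(n) | (1 << (RESIZE_STAMP_BITS - 1));
    }

    // Value CASed into sizeCtl by the thread that starts resizing a table of length n.
    static int sizeCtlAtResizeStart(int n) {
        return (resizeStamp(n) << RESIZE_STAMP_SHIFT) + 2;
    }

    // Hypothetical helper: number of resizing threads, decoded from the low 16 bits.
    static int resizers(int sizeCtl) {
        return (sizeCtl & 0xFFFF) - 1;
    }

    public static void main(String[] args) {
        int sc = sizeCtlAtResizeStart(16);
        System.out.println(sc < 0);                                          // prints true
        System.out.println((sc >>> RESIZE_STAMP_SHIFT) == resizeStamp(16));  // prints true
        System.out.println(resizers(sc));                                    // prints 1
        System.out.println(resizers(sc + 1));                                // prints 2 (one helper joined)
    }
}
```

Bit 15 of the stamp ends up as the sign bit after the shift, which is why sizeCtl is negative for the whole duration of a resize.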

Source Reading 3

These are the three most important atomic operations in the code:

    // Volatile read of the element at index i (reads from main memory)
    static final <K,V> Node<K,V> tabAt(Node<K,V>[] tab, int i) {
        return (Node<K,V>)U.getObjectVolatile(tab, ((long)i << ASHIFT) + ABASE);
    }

    // A single CAS of the element at index i from c to v:
    // returns true on success, false on failure (callers retry in their own loop)
    static final <K,V> boolean casTabAt(Node<K,V>[] tab, int i,
                                        Node<K,V> c, Node<K,V> v) {
        return U.compareAndSwapObject(tab, ((long)i << ASHIFT) + ABASE, c, v);
    }

    // Volatile write of the element at index i
    static final <K,V> void setTabAt(Node<K,V>[] tab, int i, Node<K,V> v) {
        U.putObjectVolatile(tab, ((long)i << ASHIFT) + ABASE, v);
    }
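Application code cannot call the Unsafe-based helpers directly, but java.util.concurrent.atomic.AtomicReferenceArray offers the same three kinds of access on array slots. The sketch below uses it as a stand-in to show the semantics; it is an analogy, not how ConcurrentHashMap itself is written, and the class name is invented.

```java
import java.util.concurrent.atomic.AtomicReferenceArray;

final class TabAccessDemo {
    static String demo() {
        AtomicReferenceArray<String> tab = new AtomicReferenceArray<>(16);

        String first = tab.get(3);                       // like tabAt: volatile read, slot is still null
        boolean won  = tab.compareAndSet(3, null, "a");  // like casTabAt: succeeds, slot was null
        boolean lost = tab.compareAndSet(3, null, "b");  // fails, slot now holds "a"
        tab.set(3, "c");                                 // like setTabAt: unconditional volatile write

        return first + "," + won + "," + lost + "," + tab.get(3);
    }

    public static void main(String[] args) {
        System.out.println(demo());   // prints null,true,false,c
    }
}
```

The failed second `compareAndSet` is the important case: a thread that loses the race learns about it from the return value and can retry, which is what the loop in putVal does.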

Source Reading 4

    public V put(K key, V value) {
        return putVal(key, value, false);
    }

    /** Implementation for put and putIfAbsent */
    final V putVal(K key, V value, boolean onlyIfAbsent) {
        // null keys and null values are not allowed
        if (key == null || value == null) throw new NullPointerException();
        // spread the hash code; the bin index is derived from it below
        int hash = spread(key.hashCode());
        int binCount = 0;
        for (Node<K,V>[] tab = table;;) {   // retry loop
            Node<K,V> f; int n, i, fh;
            if (tab == null || (n = tab.length) == 0)
                // the table does not exist yet: initialize it
                tab = initTable();
            else if ((f = tabAt(tab, i = (n - 1) & hash)) == null) {
                if (casTabAt(tab, i, null,
                             new Node<K,V>(hash, key, value, null)))
                    break;                   // no lock when adding to empty bin
            }
            // a resize is in progress: help with it
            else if ((fh = f.hash) == MOVED)
                tab = helpTransfer(tab, f);
            else {
                V oldVal = null;
                // the bin is occupied (hash collision or existing key): lock its head node
                synchronized (f) {
                    if (tabAt(tab, i) == f) {
                        if (fh >= 0) {
                            binCount = 1;
                            for (Node<K,V> e = f;; ++binCount) {
                                K ek;
                                if (e.hash == hash &&
                                    ((ek = e.key) == key ||
                                     (ek != null && key.equals(ek)))) {
                                    oldVal = e.val;
                                    if (!onlyIfAbsent)
                                        e.val = value;
                                    break;
                                }
                                Node<K,V> pred = e;
                                if ((e = e.next) == null) {
                                    pred.next = new Node<K,V>(hash, key,
                                                              value, null);
                                    break;
                                }
                            }
                        }
                        else if (f instanceof TreeBin) {
                            Node<K,V> p;
                            binCount = 2;
                            if ((p = ((TreeBin<K,V>)f).putTreeVal(hash, key,
                                                           value)) != null) {
                                oldVal = p.val;
                                if (!onlyIfAbsent)
                                    p.val = value;
                            }
                        }
                    }
                }
                if (binCount != 0) {
                    if (binCount >= TREEIFY_THRESHOLD)
                        treeifyBin(tab, i);
                    if (oldVal != null)
                        return oldVal;
                    break;
                }
            }
        }
        addCount(1L, binCount);
        return null;
    }

    private final Node<K,V>[] initTable() {
        Node<K,V>[] tab; int sc;
        // CAS plus a retry loop makes the one-time initialization thread-safe
        while ((tab = table) == null || tab.length == 0) {
            // sizeCtl < 0: another thread is initializing (or resizing)
            if ((sc = sizeCtl) < 0)
                // give up the CPU
                Thread.yield(); // lost initialization race; just spin
            // try to claim the initialization by CASing sizeCtl to -1
            else if (U.compareAndSwapInt(this, SIZECTL, sc, -1)) {
                try {
                    // double check
                    if ((tab = table) == null || tab.length == 0) {
                        int n = (sc > 0) ? sc : DEFAULT_CAPACITY;
                        @SuppressWarnings("unchecked")
                        Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n];
                        table = tab = nt;
                        // compute the resize threshold (0.75 * n) and store it in sc
                        sc = n - (n >>> 2);
                    }
                } finally {
                    sizeCtl = sc;
                }
                break;
            }
        }
        return tab;
    }

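I. Initializing the table

- A newly constructed map has no table yet; the table is created the first time a key-value pair is put.

- Suppose thread A and thread B call put at the same time. Both enter initTable, which without protection could create two tables. Each thread therefore checks sizeCtl: if it is < 0, another thread is already initializing the table, and the current thread yields the CPU.

- Before a thread starts the actual initialization, it uses CAS to change sizeCtl to -1, so that threads arriving later yield the CPU.

- After the CAS succeeds, the thread checks once more whether someone has already initialized the table, and creates it only if not.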
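The initialization steps above can be sketched outside the JDK with an AtomicInteger standing in for the sizeCtl field. This is a simplified illustration under that assumption: the class `LazyInitDemo`, its `allocations` counter, and the `race` helper are invented so that the "only one thread allocates" property can be observed.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicInteger;

final class LazyInitDemo {
    private final AtomicInteger sizeCtl = new AtomicInteger(0); // stand-in for the sizeCtl field
    private volatile Object[] table;
    final AtomicInteger allocations = new AtomicInteger();      // demo-only bookkeeping

    Object[] initTable() {
        Object[] tab;
        while ((tab = table) == null) {
            int sc = sizeCtl.get();
            if (sc < 0) {
                Thread.yield();                       // someone else is initializing
            } else if (sizeCtl.compareAndSet(sc, -1)) {
                try {
                    if ((tab = table) == null) {      // re-check after winning the CAS
                        int n = (sc > 0) ? sc : 16;
                        tab = new Object[n];
                        allocations.incrementAndGet();
                        table = tab;
                        sc = n - (n >>> 2);           // resize threshold, 0.75 * n
                    }
                } finally {
                    sizeCtl.set(sc);                  // publish threshold, release the claim
                }
                break;
            }
        }
        return tab;
    }

    // Starts `threads` threads that all call initTable at once; returns the allocation count.
    static int race(int threads) {
        LazyInitDemo demo = new LazyInitDemo();
        CountDownLatch start = new CountDownLatch(1);
        Thread[] ts = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            ts[i] = new Thread(() -> {
                try {
                    start.await();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                demo.initTable();
            });
            ts[i].start();
        }
        start.countDown();
        try {
            for (Thread t : ts) t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return demo.allocations.get();
    }

    public static void main(String[] args) {
        System.out.println(race(8));   // prints 1
    }
}
```

The re-check inside the try block matters: a thread can pass the outer null test, lose time, and then win the CAS after another thread has already finished, in which case it must not allocate a second table.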

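II. Inserting data

The hash is computed from the key, and the table slot (bin) that the hash maps to is checked for emptiness, so an insert falls into one of two cases:

1. The bin is empty

- Add a new Node directly with CAS, without taking a lock

- Along the way, the loop checks whether a resize is in progress and, if so, helps via helpTransfer

- Call addCount, which decides whether a resize is needed

2. The bin is not empty

- Along the way, the loop checks whether a resize is in progress and, if so, helps via helpTransfer

- Lock the bin's head node with synchronized. Only that one bin is locked, so in theory up to (table length × 0.75) threads can write at the same time; locking the whole table, which would hurt performance, is avoided

- When the list length reaches 8 (TREEIFY_THRESHOLD), treeifyBin is called to convert the list into a red-black tree; if the table is still shorter than MIN_TREEIFY_CAPACITY (64), treeifyBin resizes the table instead

- Call addCount, which decides whether to start a resize or help one that is in progress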
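The two insert cases can be shown in a heavily simplified map. The sketch below is an assumption-laden toy, not the JDK algorithm: `MiniMap` has a fixed 16-slot table, never resizes or treeifies, uses AtomicReferenceArray in place of the Unsafe helpers, and uses LongAdder in place of addCount. It only demonstrates "CAS into an empty bin, otherwise lock the head node".

```java
import java.util.concurrent.atomic.AtomicReferenceArray;
import java.util.concurrent.atomic.LongAdder;

// Hypothetical toy map: fixed 16 bins, no resize, no trees, no removal.
final class MiniMap {
    static final class Node {
        final int hash;
        final String key;
        volatile Integer val;
        volatile Node next;
        Node(int hash, String key, Integer val) {
            this.hash = hash; this.key = key; this.val = val;
        }
    }

    private final AtomicReferenceArray<Node> table = new AtomicReferenceArray<>(16);
    private final LongAdder count = new LongAdder();

    Integer put(String key, Integer value) {
        int h = key.hashCode();
        int hash = (h ^ (h >>> 16)) & 0x7fffffff;   // same mixing idea as spread()
        int i = (table.length() - 1) & hash;
        for (;;) {
            Node f = table.get(i);
            if (f == null) {
                // Case 1: empty bin, publish the node with one CAS and no lock
                if (table.compareAndSet(i, null, new Node(hash, key, value)))
                    break;
                // CAS lost to another thread: loop again and take case 2
            } else {
                // Case 2: occupied bin, lock only this bin's head node
                synchronized (f) {
                    if (table.get(i) != f)
                        continue;                   // head changed, retry
                    for (Node e = f;; e = e.next) {
                        if (e.hash == hash && e.key.equals(key)) {
                            Integer old = e.val;
                            e.val = value;
                            return old;             // existing key: count unchanged
                        }
                        if (e.next == null) {
                            e.next = new Node(hash, key, value);
                            break;
                        }
                    }
                }
                break;
            }
        }
        count.increment();                          // plays the role of addCount
        return null;
    }

    long size() { return count.sum(); }

    // Fills one map from several threads with distinct keys; returns the final size.
    static long concurrentFill(int threads, int keysPerThread) {
        MiniMap map = new MiniMap();
        Thread[] ts = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            final int id = t;
            ts[t] = new Thread(() -> {
                for (int k = 0; k < keysPerThread; k++)
                    map.put("t" + id + "-" + k, k);
            });
            ts[t].start();
        }
        try {
            for (Thread th : ts) th.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return map.size();
    }

    public static void main(String[] args) {
        System.out.println(concurrentFill(4, 1000));   // prints 4000
    }
}
```

Threads that hash to different bins never contend on the same lock here, which is the point of locking the head node instead of the whole table.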

Source Reading 5

    private final void addCount(long x, int check) {
        CounterCell[] as; long b, s;
        // If the CounterCell array exists, record the count in a CounterCell first;
        // otherwise try to CAS baseCount, and fall back to a CounterCell if that CAS fails
        if ((as = counterCells) != null ||
            !U.compareAndSwapLong(this, BASECOUNT, b = baseCount, s = b + x)) {
            CounterCell a; long v; int m;
            boolean uncontended = true;
            if (as == null || (m = as.length - 1) < 0 ||
                (a = as[ThreadLocalRandom.getProbe() & m]) == null ||
                !(uncontended =
                  U.compareAndSwapLong(a, CELLVALUE, v = a.value, v + x))) {
                fullAddCount(x, uncontended);
                return;
            }
            if (check <= 1)
                return;
            s = sumCount();
        }
        if (check >= 0) {
            Node<K,V>[] tab, nt; int n, sc;
            while (s >= (long)(sc = sizeCtl) && (tab = table) != null &&
                   (n = tab.length) < MAXIMUM_CAPACITY) {
                // stamp that identifies a resize of a table of length n
                int rs = resizeStamp(n);
                // a resize is already in progress
                if (sc < 0) {
                    // re-check: stop if the resize has finished or no more helpers can join
                    if ((sc >>> RESIZE_STAMP_SHIFT) != rs || sc == rs + 1 ||
                        sc == rs + MAX_RESIZERS || (nt = nextTable) == null ||
                        transferIndex <= 0)
                        break;
                    // join the resize as a helper
                    if (U.compareAndSwapInt(this, SIZECTL, sc, sc + 1))
                        transfer(tab, nt);
                }
                // the resize threshold has been reached: start a resize
                else if (U.compareAndSwapInt(this, SIZECTL, sc,
                                             (rs << RESIZE_STAMP_SHIFT) + 2))
                    transfer(tab, null);
                s = sumCount();
            }
        }
    }
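The counting half of addCount (baseCount plus an array of CounterCell) follows the same striping idea as java.util.concurrent.atomic.LongAdder: under contention, threads add to different cells, and the total is the sum of the base and all cells. The sketch below uses LongAdder itself as a stand-in; the class and method names are invented, and it says nothing about the resize-check half of addCount.

```java
import java.util.concurrent.atomic.LongAdder;

final class StripedCountDemo {
    // Increments one shared LongAdder from several threads and returns the final sum.
    static long countConcurrently(int threads, int incrementsPerThread) {
        LongAdder counter = new LongAdder();
        Thread[] ts = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            ts[t] = new Thread(() -> {
                for (int j = 0; j < incrementsPerThread; j++)
                    counter.increment();      // contended adds spread over internal cells
            });
            ts[t].start();
        }
        try {
            for (Thread th : ts) th.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counter.sum();                 // base + all cells, like sumCount()
    }

    public static void main(String[] args) {
        System.out.println(countConcurrently(8, 10_000));   // prints 80000
    }
}
```

The sum is exact here only because it is read after all writers have finished; read concurrently, it is a moving estimate, which is also why ConcurrentHashMap's size() is only an approximation while updates are in flight.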

Source Reading 6

    private final void transfer(Node<K,V>[] tab, Node<K,V>[] nextTab) {
        int n = tab.length, stride;
        if ((stride = (NCPU > 1) ? (n >>> 3) / NCPU : n) < MIN_TRANSFER_STRIDE)
            // each thread migrates at least 16 bins
            stride = MIN_TRANSFER_STRIDE; // subdivide range
        if (nextTab == null) {            // initiating
            try {
                // create a new table twice as large: n << 1
                @SuppressWarnings("unchecked")
                Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n << 1];
                nextTab = nt;
            } catch (Throwable ex) {      // try to cope with OOME
                sizeCtl = Integer.MAX_VALUE;
                return;
            }
            nextTable = nextTab;
            // transfer index: bins are migrated from the end toward the front
            transferIndex = n;
        }
        // length of the new table
        int nextn = nextTab.length;
        ForwardingNode<K,V> fwd = new ForwardingNode<K,V>(nextTab);
        boolean advance = true;
        boolean finishing = false; // to ensure sweep before committing nextTab
        for (int i = 0, bound = 0;;) {
            Node<K,V> f; int fh;
            while (advance) {
                int nextIndex, nextBound;
                if (--i >= bound || finishing)
                    advance = false;
                else if ((nextIndex = transferIndex) <= 0) {
                    i = -1;
                    advance = false;
                }
                else if (U.compareAndSwapInt
                         (this, TRANSFERINDEX, nextIndex,
                          nextBound = (nextIndex > stride ?
                                       nextIndex - stride : 0))) {
                    // claim a range of bins, one stride long
                    bound = nextBound;
                    i = nextIndex - 1;
                    advance = false;
                }
            }
            if (i < 0 || i >= n || i + n >= nextn) {
                int sc;
                if (finishing) {
                    nextTable = null;
                    table = nextTab;
                    sizeCtl = (n << 1) - (n >>> 1);
                    return;
                }
                if (U.compareAndSwapInt(this, SIZECTL, sc = sizeCtl, sc - 1)) {
                    if ((sc - 2) != resizeStamp(n) << RESIZE_STAMP_SHIFT)
                        return;
                    finishing = advance = true;
                    i = n; // recheck before commit
                }
            }
            else if ((f = tabAt(tab, i)) == null)
                advance = casTabAt(tab, i, null, fwd);
            else if ((fh = f.hash) == MOVED)
                advance = true; // already processed
            else {
                synchronized (f) {
                    if (tabAt(tab, i) == f) {
                        // ln: low list (stays at index i); hn: high list (moves to i + n)
                        Node<K,V> ln, hn;
                        if (fh >= 0) {
                            int runBit = fh & n;
                            Node<K,V> lastRun = f;
                            for (Node<K,V> p = f.next; p != null; p = p.next) {
                                int b = p.hash & n;
                                if (b != runBit) {
                                    runBit = b;
                                    lastRun = p;
                                }
                            }
                            if (runBit == 0) {
                                ln = lastRun;
                                hn = null;
                            }
                            else {
                                hn = lastRun;
                                ln = null;
                            }
                            for (Node<K,V> p = f; p != lastRun; p = p.next) {
                                int ph = p.hash; K pk = p.key; V pv = p.val;
                                if ((ph & n) == 0)
                                    ln = new Node<K,V>(ph, pk, pv, ln);
                                else
                                    hn = new Node<K,V>(ph, pk, pv, hn);
                            }
                            setTabAt(nextTab, i, ln);
                            setTabAt(nextTab, i + n, hn);
                            setTabAt(tab, i, fwd);
                            advance = true;
                        }
                        else if (f instanceof TreeBin) {
                            TreeBin<K,V> t = (TreeBin<K,V>)f;
                            TreeNode<K,V> lo = null, loTail = null;
                            TreeNode<K,V> hi = null, hiTail = null;
                            int lc = 0, hc = 0;
                            for (Node<K,V> e = t.first; e != null; e = e.next) {
                                int h = e.hash;
                                TreeNode<K,V> p = new TreeNode<K,V>
                                    (h, e.key, e.val, null, null);
                                if ((h & n) == 0) {
                                    if ((p.prev = loTail) == null)
                                        lo = p;
                                    else
                                        loTail.next = p;
                                    loTail = p;
                                    ++lc;
                                }
                                else {
                                    if ((p.prev = hiTail) == null)
                                        hi = p;
                                    else
                                        hiTail.next = p;
                                    hiTail = p;
                                    ++hc;
                                }
                            }
                            ln = (lc <= UNTREEIFY_THRESHOLD) ? untreeify(lo) :
                                (hc != 0) ? new TreeBin<K,V>(lo) : t;
                            hn = (hc <= UNTREEIFY_THRESHOLD) ? untreeify(hi) :
                                (lc != 0) ? new TreeBin<K,V>(hi) : t;
                            setTabAt(nextTab, i, ln);
                            setTabAt(nextTab, i + n, hn);
                            setTabAt(tab, i, fwd);
                            advance = true;
                        }
                    }
                }
            }
        }
    }

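The resize process:

- Work is divided among threads according to the table length; each thread migrates at least 16 bins

- Create a new table twice the size of the old one (n << 1)

- Compute the index range each thread works on

- Start migrating: lock the bin's head node; any other thread that needs the same bin blocks until the lock is released

- Split the nodes into low and high by hash & n, and build two new lists that are moved into the new table

- Repeat until the migration is complete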
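The low/high split rests on one bit of the hash. A sketch of the index arithmetic only (no nodes, no locking), with invented names `SplitDemo`, `newIndex`, and `agreesWithRehash`:

```java
final class SplitDemo {
    // Index in the new table (length 2n) for a hash that lives in a table of length n.
    static int newIndex(int hash, int n) {
        int i = hash & (n - 1);                 // index in the old table
        return ((hash & n) == 0) ? i : i + n;   // low list stays at i, high list moves to i + n
    }

    // True if the split rule gives the same slot as recomputing hash & (2n - 1) from scratch.
    static boolean agreesWithRehash(int n, int maxHash) {
        for (int h = 0; h <= maxHash; h++) {
            if (newIndex(h, n) != (h & (2 * n - 1)))
                return false;
        }
        return true;
    }

    public static void main(String[] args) {
        System.out.println(newIndex(5, 16));              // prints 5  (bit 16 is 0: low list)
        System.out.println(newIndex(21, 16));             // prints 21 (bit 16 is 1: 5 + 16)
        System.out.println(agreesWithRehash(16, 10_000)); // prints true
    }
}
```

Because every node of old bin i lands in either slot i or slot i + n of the new table, a thread holding the lock on bin i never needs to touch any other thread's bins.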

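Why resize with multiple threads?

Starting from the default capacity of 16, after several resizes the table length is 2^(4 + number of resizes), so the table can become very large and migrating it is a lot of work. Multiple threads share that work, on the condition that the data is not corrupted.

The migration is therefore done in segments, and each thread handles no fewer than MIN_TRANSFER_STRIDE = 16 bins at a time.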
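To get a feel for the segment size, the stride formula from transfer can be evaluated on its own. In this sketch the CPU count is passed in as a parameter (an assumption made for testability; the JDK reads it once into NCPU), and the class name is invented.

```java
final class StrideDemo {
    static final int MIN_TRANSFER_STRIDE = 16;

    // Number of bins one thread claims per step, for table length n and ncpu processors.
    static int stride(int n, int ncpu) {
        int stride = (ncpu > 1) ? (n >>> 3) / ncpu : n;
        return (stride < MIN_TRANSFER_STRIDE) ? MIN_TRANSFER_STRIDE : stride;
    }

    public static void main(String[] args) {
        System.out.println(stride(1024, 4));  // prints 32   (1024 / 8 / 4)
        System.out.println(stride(64, 8));    // prints 16   (64 / 8 / 8 = 1, raised to the minimum)
        System.out.println(stride(1024, 1));  // prints 1024 (single CPU: one thread takes everything)
    }
}
```

With a 1024-slot table on 4 CPUs there are 1024 / 32 = 32 ranges to claim, so a helper thread that arrives late still finds work as long as transferIndex is above 0.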

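Diagrams

The put process

Thread safety is ensured mainly in two ways: CAS and synchronized.

What is CAS?

It is covered in a separate article; discussion is welcome there.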

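The resize process

Resize steps:

- When the resize condition is met, create a new table twice the size of the old one (n << 1), then divide the work among threads, each handling at least 16 bins

- Using hash & n, each node is classified as low (the bit is 0) or high (the bit is 1)

- Build two lists, one for 0 and one for 1

- The 0 list is placed at the same index in the new table; the 1 list is placed at (old index + n), which is (old index + 16) for a 16-slot table

- Once thread 1 and thread 2 have migrated their bins, a fwd node (ForwardingNode) is placed in each old slot to mark it as migrated. No element can be put into such a slot of the old table any more: as putVal shows, a thread that finds fwd there calls helpTransfer and then retries against the new table. A bin that thread 3 has not yet replaced with fwd can still accept new elements in the old table.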
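The observable result of this design is that puts issued while the table is being resized are not lost. A small check against the real java.util.concurrent.ConcurrentHashMap, starting from the default 16-slot table so that many resizes happen while the writers run (class and method names are invented for the example):

```java
import java.util.concurrent.ConcurrentHashMap;

final class ResizeUnderLoadDemo {
    // Several threads insert distinct keys into one map; returns the final size.
    static int fill(int threads, int perThread) {
        ConcurrentHashMap<Integer, Integer> map = new ConcurrentHashMap<>();
        Thread[] ts = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            final int base = t * perThread;   // each thread gets its own key range
            ts[t] = new Thread(() -> {
                for (int k = 0; k < perThread; k++)
                    map.put(base + k, k);
            });
            ts[t].start();
        }
        try {
            for (Thread th : ts) th.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return map.size();
    }

    public static void main(String[] args) {
        System.out.println(fill(4, 10_000));   // prints 40000
    }
}
```

Running the same loop against a plain HashMap is not safe and can lose entries or corrupt the table, which is the practical difference the forwarding-node protocol buys.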

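Summary

- ConcurrentHashMap is thread-safe

- The underlying data structure is array + linked list + red-black tree, the same as HashMap

- Thread safety comes from extensive use of synchronized locks and CAS updates, which costs some efficiency compared with HashMap.

- Resizing is thread-safe and also relies on synchronized and CAS, which costs some efficiency. In exchange, the resize is multi-threaded: each thread migrates no fewer than 16 bins at a time, and a put that runs into a resize in progress helps with it.

PS: If anything here is wrong, corrections are very welcome!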
